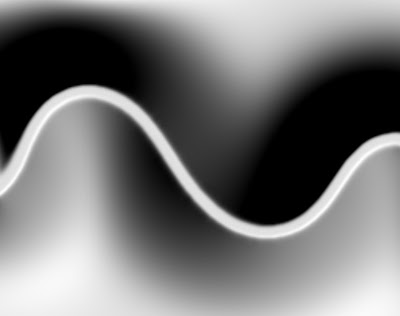

...and from it infer slope information for the land:

(Click images for larger versions.) This slope information can then be used to guide roads beside or across the river, a la Interactive Procedural Street Modeling.

I do this by setting the land squares to a high elevation and the river squares to zero, and then blurring the landscape while keeping the river squares clamped at zero. I am also experimenting with adding in a small elevation boost to the land before each blur iteration. This helps keep the landscape from flattening out, especially inside tight loops of the river:

Note that this slope information isn't necessarily indicative of how steep the actual landscape would be. I simply need good slope information to guide road placement.
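The clamped blur described above can be sketched roughly as follows. This is a minimal illustration rather than the actual implementation: the names `blur_step`, `slope_field`, `land_height`, and `boost` are made up for the example, the grid is a plain list of lists, and repeating the border cells at the map edge is an assumption.

```python
# Hypothetical sketch: clamped 3x3 blur on a grid of land/river squares.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]  # weights sum to 16


def blur_step(height, is_river, boost=0.0):
    """One blur pass; river squares stay clamped at zero."""
    rows, cols = len(height), len(height[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if is_river[r][c]:
                continue  # clamped: leave at 0.0
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    # Assumption: map edges repeat the border square.
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    value = height[rr][cc]
                    if not is_river[rr][cc]:
                        value += boost  # optional boost, land squares only
                    total += KERNEL[dr + 1][dc + 1] * value
            out[r][c] = total / 16.0
    return out


def slope_field(is_river, land_height=100.0, iterations=50, boost=0.0):
    """Start with high land and zero river, then blur repeatedly."""
    height = [[0.0 if river else land_height for river in row]
              for row in is_river]
    for _ in range(iterations):
        height = blur_step(height, is_river, boost)
    return height
```

With a river running down the left edge of the map, the result rises steadily away from the river, which is the kind of slope information the road placement needs.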

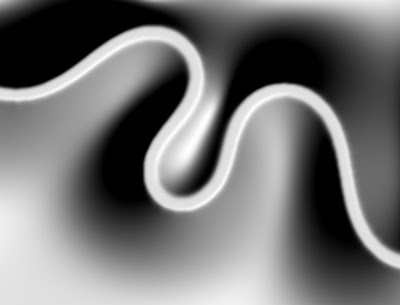

With gradient information in hand it's possible to trace out roads that follow the terrain (click for larger version):

The elevation boost on each blur step is a bad idea, I think. It tends to cause hills to crease more, which makes level roads turn sharply when they go around the crease. Hill-climbing roads also turn sharply and go up the crest when you have sharp hills. I think I prefer rounded hills so that as roads are set further back from the river they follow it less precisely. You can see this at work in the screenshot above.
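For illustration, here is one way the two kinds of road could be traced from a height grid. It is only a sketch under assumptions: `gradient` and `trace_road` are hypothetical names, the gradient is a simple central difference at the nearest square, and the road advances in fixed-length steps. A level road steps perpendicular to the gradient (along a contour), and a hill-climbing road steps along it.

```python
import math


def gradient(height, x, y):
    """Central-difference gradient at the square nearest (x, y)."""
    rows, cols = len(height), len(height[0])
    r = min(max(int(round(y)), 1), rows - 2)
    c = min(max(int(round(x)), 1), cols - 2)
    gx = (height[r][c + 1] - height[r][c - 1]) / 2.0
    gy = (height[r + 1][c] - height[r - 1][c]) / 2.0
    return gx, gy


def trace_road(height, start, steps, step_len=0.5, level=True):
    """Trace a road as a list of (x, y) points.

    level=True follows a contour; level=False climbs straight uphill.
    """
    x, y = start
    path = [(x, y)]
    for _ in range(steps):
        gx, gy = gradient(height, x, y)
        norm = math.hypot(gx, gy)
        if norm < 1e-9:
            break  # flat ground: no preferred direction
        if level:
            dx, dy = -gy / norm, gx / norm  # perpendicular to the gradient
        else:
            dx, dy = gx / norm, gy / norm   # along the gradient
        x += dx * step_len
        y += dy * step_len
        if not (0 <= x <= len(height[0]) - 1 and 0 <= y <= len(height) - 1):
            break  # walked off the map
        path.append((x, y))
    return path
```

Because both directions come straight from the local gradient, a crease in the height field shows up as an abrupt change in road direction, which matches the sharp turns described above.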

I am not yet spacing the roads apart intelligently; I just generated a bunch of maps and picked the best-looking example to show above. The SIGGRAPH paper I linked to above has a reference to another paper that describes how to space the roads apart, though, so that should be relatively easy to add.

My plan is to have a few major highways that follow the terrain like this, and then fill in between with grid-aligned roads, since the game is tile-based.

At the moment my blur is extremely expensive. Because of the clamping it's hard to use a very big filter kernel (I'm using 3x3 currently) so it takes many iterations to propagate the blurring over large distances. My filter kernel looks like:

    1 2 1
    2 4 2
    1 2 1

When I'm dealing with Gaussian blurring I wish I'd stayed in grad school; the faculty at UNC worked pretty hard to hammer this stuff in. I feel like I'm twiddling a lot of knobs without wholly grasping how it all fits together.
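As a rough guide to why so many iterations are needed: the kernel above is the outer product of the row 1 2 1 with itself, and once normalized by 16 each pass adds a variance of 0.5 per axis. Ignoring the clamping (which is an assumption, since the clamp changes the behavior near the river), reaching the equivalent of a Gaussian with standard deviation sigma takes about 2 * sigma^2 passes. The function name `iterations_for_sigma` is made up for this sketch.

```python
import math

ROW = [1, 2, 1]
# The 3x3 kernel is separable: it is the outer product of ROW with itself.
KERNEL = [[a * b for b in ROW] for a in ROW]
KERNEL_SUM = sum(sum(row) for row in KERNEL)  # normalization factor


def iterations_for_sigma(sigma):
    """Approximate pass count for an unclamped blur of the given sigma.

    [1, 2, 1] / 4 has variance 0.5, and variances add under repeated
    convolution, so n passes give variance n / 2 per axis.
    """
    return math.ceil(2.0 * sigma * sigma)
```

For example, `iterations_for_sigma(10)` gives 200, so spreading the blur over a few dozen squares already costs hundreds of full-map passes.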

I think I might be able to make it go much faster and still maintain the clamping behavior. I would run one iteration of smoothing at full resolution, then down-sample by a factor of two in each dimension. For the downsampled image, any pixel that was based in part on a clamped pixel in the source image would be marked as clamped. The process of blurring, downsampling, and clamping would be repeated for several more levels. Then I would go through a reverse process of upsampling with interpolation to arrive at a heavily-blurred version of the source image.
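A sketch of that multi-resolution idea might look like the following. It is untested against real maps and makes several assumptions: the helper names (`blur`, `downsample`, `upsample`, `pyramid_blur`) are hypothetical, downsampling averages 2x2 blocks, recursion stops when a dimension is odd, and nearest-neighbour upsampling stands in for proper interpolation to keep the example short.

```python
def blur(height, clamped):
    """One 3x3 pass (1 2 1 / 2 4 2 / 1 2 1); clamped cells stay at zero."""
    rows, cols = len(height), len(height[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if clamped[r][c]:
                continue
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)  # repeat border cells
                    cc = min(max(c + dc, 0), cols - 1)
                    total += (2 - abs(dr)) * (2 - abs(dc)) * height[rr][cc]
            out[r][c] = total / 16.0
    return out


def downsample(height, clamped):
    """Halve each dimension; a coarse cell is clamped if any source cell was."""
    rows, cols = len(height) // 2, len(height[0]) // 2
    coarse = [[0.0] * cols for _ in range(rows)]
    mask = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            block = [(2 * r + i, 2 * c + j) for i in (0, 1) for j in (0, 1)]
            if any(clamped[rr][cc] for rr, cc in block):
                mask[r][c] = True
            else:
                coarse[r][c] = sum(height[rr][cc] for rr, cc in block) / 4.0
    return coarse, mask


def upsample(coarse, fine_clamped):
    """Nearest-neighbour upsample, re-applying the fine-level clamp."""
    return [[0.0 if fine_clamped[r][c] else coarse[r // 2][c // 2]
             for c in range(len(fine_clamped[0]))]
            for r in range(len(fine_clamped))]


def pyramid_blur(height, clamped, levels):
    """Blur, downsample, recurse, then upsample back to this resolution."""
    if levels == 0 or len(height) % 2 or len(height[0]) % 2:
        return blur(height, clamped)
    coarse, mask = downsample(blur(height, clamped), clamped)
    return upsample(pyramid_blur(coarse, mask, levels - 1), clamped)
```

One visible cost of this version is that the clamped region grows at every level, so land squares close to the river come back as zero after upsampling. That is part of the information loss that plain blurring at full resolution would not cause.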

Obviously the downsampling loses information that the blurring itself would not, at least in theory; I would like to understand this process better.
